Problem: Design a large scale collaborative editor like Google Docs, OneNote, Word online etc.

Design: Before going into the details of components, services, DBs, we are going to address the key question which is how to solve the concurrency issue. First thing which comes to mind is "Locks" but this will introduce too much delay. The editor with locking mechanism is not even usable if 5 (may be lesser) or more people are working on the same document.

Till now we have understood that we need to get a lock free optimistic concurrency control mechanism here. There are two approaches:

- Operational Transformation: The client can send raw events (operations) character by character to the server (like character M inserted at position 3, character O deleted from position 10 etc.). This is also called operational based sync.

- Differential Sync: The client can send the delta (difference) between server version and local copy. The server will not know of the exact operation performed at the client in this case.

Let's go in depth of every approach:

Operational Transformation(OT): Let's understand the problem first using an example:

In this scenario, the users start with an initial string “abc”, User1 intends to insert the character “Y” at the index “2” and User2 intends to insert the character “Z” at the index “2”. Both of the users execute the operation locally, resulting in the strings “abYc” and “abZc” respectively. After local execution, they both send their operations to each other and execute it straight away; subsequently resulting in two different strings “abZYc” and “abYZc”.

This problem illustrates how naively sending operations between clients will result in inconsistent states between users. The solution to this problem is to transform both the operations with respect to each other, and then apply it to their counterparts.

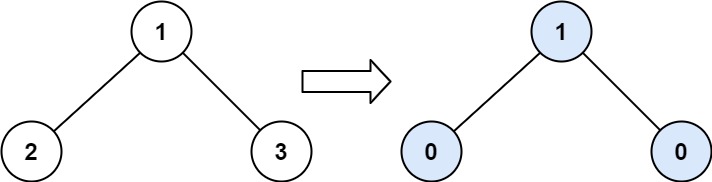

Now that we have understood the problem, let's understand how OT solves this synchronization problem. Here is the mathematical function:

The math form is little tough to understand, but it is best explained graphically using the OT Diamond illustration:

Two clients perform two operations simultaneously (operation a and b) at their respective ends. These two operations can be send to transform function that will generate two new operations called (a’ and b’).

When b’ is applied to a on one client end, and a’ is applied to b on another client end, both the clients will come to the same consistent state. Let's use the above example to understand the approach better:

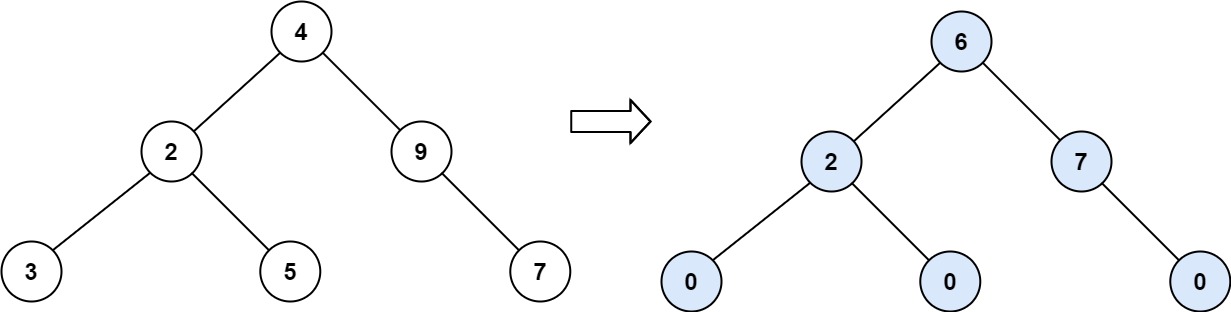

Our original string was ABC (when everything was in sync).

- User 1 performed operation a (insert Y at index 2) resulting in the state: abYc.

- User 2 performed operation b (insert X at index 2) resulting in the state: abXc.

Note the timestamp is maintained for both the operations to stick to the laws of Optimistic Locking. Based on the timestamps we will come to know operation 'a' happened before operation 'b'.

When we pass both these operations and their order to the transform function, it will result in two new operations.

- operation a‘ : insert Y at index 2

- operation b’ : insert X at index 3

User 1: Apply b’ after applying a. It will first insert Y at index 2 to the original string (ABC), and then insert X at index 3 to the intermediate string (abYc), getting the resultant string as abYXc.

User 2: Apply a’ after applying b. It will first insert X at index 2 to the original string (ABC), and then Y at index 2 to the intermediate string (abXc), getting the resultant string as abYXc.

You can see after applying the transformed operations, both clients arrive at the synchronous state.

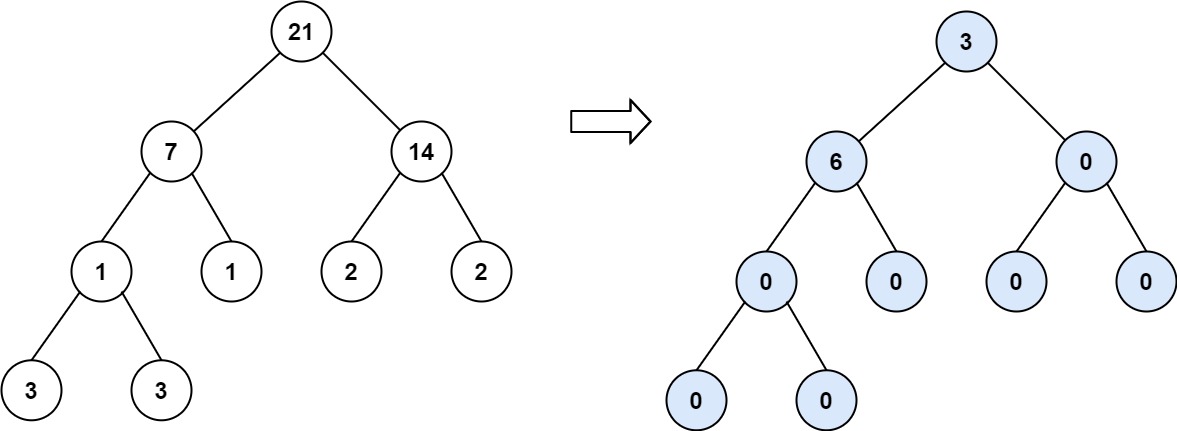

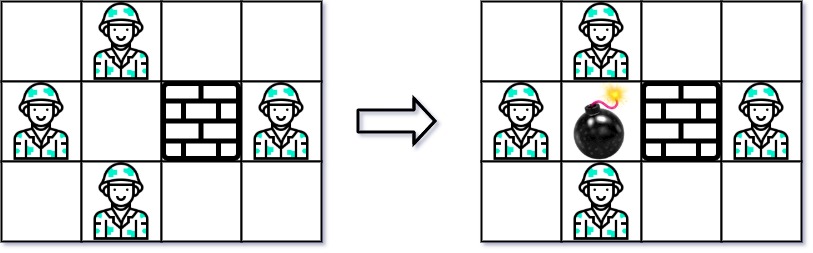

The above diamond problem is pretty much the only thing OT algorithm can solve at the fundamental level. Given two upper sides of the diamond, OT can transform them wrt each other and complete the remaining bottom two parts but with the scale different problems arise. Let's add just one more concurrent problem and consider the client - server architecture. The server is the single source of truth and clients can edit the document concurrently and send the changes to the server to sync.

Consider a case when a client performs two consecutive operations a and b. It sends the operations one by one to the server. The server, meanwhile, got operation c from another client working concurrently elsewhere.

So, now server has to first merge a and c because those two operations are branched from the last synced server state. The Server can apply transformation to operation a and c, and figure out a’. It can then apply a’ on top of changes from c and get a consistent state. So far so good.

Ideally, at this point, the server should send c’ to the client to make the client state consistent. But just when it was about to do so. Operation b arrives, the server is in fix. It has nothing to transform operation b to because it was derived from a, and not from any server synced state. To fix this the server uses a technique called Bridging.

To incorporate changes of operation b onto the server side, we need to somehow find a way to transform "b". But we only have one side of the diamond. This is where server has to create a bridge to form the other side of the diamond (as shown by the dotted green line).

As it turns out, the bridge is nothing but transformation of operation c against a which the server knows how to perform because a and c both are derived from the same last synced server state. Once the bridge is established, the upper two sides of diamond is complete for operation b. So now, the server can compute b’ (the lower side of the diamond). Apply it on its local copy (the one formed after applying a’ earlier) and make everything consistent at its end. The server now has applied operation c, a and b at its end successfully.

However, the client is yet to be in sync. It needs a operation which it can apply to its own state to make his end consistent. This is achieved as shown below:

The server will transform c against the composition of a and b, producing c’. It sends c’ back to the client which applies to its own state and become consistent.

Although the above demonstrated approach will work functionally to any number of client and server operations, and make everything consistent but it has huge scalability issues mainly because the server is required to do all the heavy lifting; server is performing all the transformations. Server is required to create these bridges and for that it has to hold onto every intermediate operations. In the above example, after transforming a and c against each other, and getting a’, the server cannot forget c’ because it could be further needed to create bridges (like in case of operation b’s bridge).

This sounds like a trivial problem initially but imagine if there are hundreds of operation pumping in from a client and hundreds of un-synchronized operations pending on the server end. The server has to remember all intermediate bottom half OT operations for bridging which is practically impossible.

To solve this problem we can follow the rules given below:

- All the operations that client sends to the server needs to be branched out from a known state in the server history. Otherwise, the server will discard the operation. So, operation b in the above example will be discarded because it was not branched from a known server state.

- Client will only send one operation at a time, and wait for the acknowledgement from the server before sending the next one.

- Server will broadcast the new changes it has applied to its own state to all the clients as soon as it applies it.

Of-course, simply rejecting the divergence will make the whole collaborative system all but useless. So, the server offloads all the heavy lifting to the client. We don’t want the server to maintain intermediate states of thousands (or millions) of clients. The server offloads all the compound OT work to the client. Server will only perform simple OT in which there will never be any need to remember any intermediate state.

The client will keep the track of its own local unsync operations and also listen for new server operations that happened after its own last synced version. The client has to keep track of just one server, since there’s never going to be any more than one (logical) server. This does simplify things from the server’s perspective but also the client will not do double the work as illustrated below:

- To being with, client performs an operation a from the last synced server state. It starts building the bridge until the operation is synced with the server. It sends operation a to the server, and waits.

- Client performs another operation (b) locally but as the rules says, it must not send it to the server until it receives acknowledgment from the server about operation a. So, it keeps operation b in the buffer.

- Meanwhile, at the server end, before operation a, two more operations were pending to be synced. (operation c and d). They happened before a as validated by the timestamp. Server applies c to its local copy and creates a new state. It then broadcasts c to all clients.

- Our client receives operation c which it transforms against its two local operations (a and b). It generates c’ which it adds to the bridge.

- Client performs another local operation e locally. It adds this operation to the bridge and also adds to the buffer behind b.

- Meanwhile, the server performs operation d at its end (on top of c), and broadcasts the message to all the clients. Our client receives the message, it transforms d against the composition of all its buffered operations (a, b and e), and generate d’. It adds d’ to the bridge.

- Finally, server picks up operation a from its queue, transforms it against operation c and d (these two operations were branches out from the same parent as that of operation a). Generates operation a’. It applies a’ to its local state making it in sync. Broadcasts it to every client.

- Our client will receive operation a’. It will come to know it is its own operation because with every operation client generates a unique id, which server returns back after transformation and broadcasting.

- Now, the client will pick the next operation from the buffer (operation b) transform it wrt a’ since that is now the latest server state, and send it to the server for sync, and so on.

Hopefully with this the whole OT mechanism is clear and now let's move to the second mechanism.

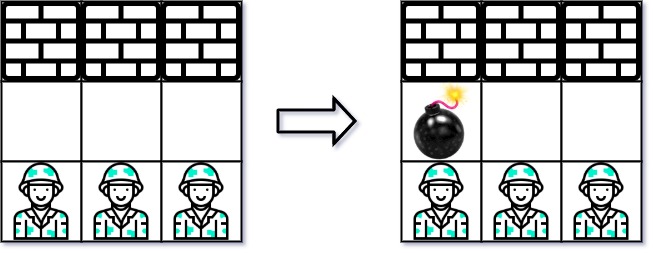

Differential Synchronization: Differential synchronization is a symmetrical algorithm employing an unending cycle of background difference (diff) and patch operations. Below is an idealized data flow diagram for differential synchronization. It assumes two documents (say Client Text and Server Text) which are located on the same computer with no network.

The following walk-through starts with Client Text, Common Shadow and Server Text all being equal. Client Text and Server Text may be edited at any time. The goal is to keep these two texts as close as possible with each other at all times.

- Client Text is diffed against the Common Shadow.

- This returns a list of edits which have been performed on Client Text.

- Client Text is copied over to Common Shadow. This copy must be identical to the value of Client Text in step 1, so in a multi-threaded environment a snapshot of the text should have been taken.

- The edits are applied to Server Text on a best-effort basis.

- Server Text is updated with the result of the patch. Steps 4 and 5 must be atomic, but they do not have to be blocking; they may be repeated until Server Text stays still long enough.

The process now repeats symmetrically in the other direction. This time the Common Shadow is the same as Client Text was in the previous half of the synchronization, so the resulting diff will return modifications made to Server Text, not the result of the patch in step 5.

Here's an example of actual data flow.

a. Client Text, Common Shadow and Server Text start out with the same string: "Macs had the original point and click UI."

b. Client Text is edited (by the user) to say: "Macintoshes had the original point and click interface." (edits underlined)

c. The Diff in step 1 returns the following two edits:

@@ -1,11 +1,18 @@

Mac

+intoshe

s had th

@@ -35,7 +42,14 @@

ick

-UI

+interface

.

d. Common Shadow is updated to also say: "Macintoshes had the original point and click interface."

e. Meanwhile Server Text has been edited (by another user) to say: "Smith & Wesson had the original point and click UI." (edits underlined)

f. In step 4 both edits are patched onto Server Text. The first edit fails since the context has changed too much to insert "intoshe" anywhere meaningful. The second edit succeeds perfectly since the context matches.

g. Step 5 results in a Server Text which says: "Smith & Wesson had the original point and click interface."

h. Now the reverse process starts. First the Diff compares Server Text with Common Shadow and returns the following edit:

@@ -1,15 +1,18 @@

-Macintoshes

+Smith & Wesson

had

i. Finally this patch is applied to Client Text, thus backing out the failed "Macs" -> "Macintoshes" edit and replacing it with "Smith & Wesson". The "UI" -> "interface" edit is left untouched. Any changes which have been made to Client Text in the mean time will be patched around and incorporated into the next synchronization cycle.

The method described above is the simplest form of differential synchronization, but it will not work on client-server systems since the Common Shadow is, well, common. In order to execute on two systems, the shadow needs to be split in two and updated separately. Conceptually this is the same algorithm.

Client Text and Server Shadow (or symmetrically Server Text and Client Shadow) must be absolutely identical after every half of the synchronization. This should be the case since "(v1 Diff v2) Patch v1 == v2". Thus assuming the system starts in a consistent state, it should remain in a consistent state. Note that the patches on the shadows should fit perfectly, thus they may be fragile patches, whereas the patches on the texts are best-effort fuzzy patches.

However, on a network with best-effort delivery, nothing is guaranteed. Therefore a simple checksum of Client Shadow ought to be sent along with the Edits and compared to Server Shadow after the patches have been applied. If the checksum fails to match, then something went wrong and one side or the other must transmit the whole body of the text to get the two parties back in sync. This will result in data loss equal to one synchronization cycle.

This will work but what will happen in case of network failure. In this case the client might stop synchronizing for a while until the connection times out. When the connection is restored on the following synchronization, the shadows will be out of sync which requires a transmission of the full text to get back in sync. This will destroy all changes since the previous successful synchronization. If this form of data-loss is unacceptable, a further refinement adds guaranteed delivery.

In a nutshell: Normal operation works identically to the Dual System Method described above. However in the case of packet loss, the edits are queued up in a stack and are retransmitted to the remote party on every sync until the remote party returns an acknowledgment of receipt. The server keeps two copies of the shadow, "Server Shadow" is the most up to date copy, and "Backup Shadow" is the previous version for use in the event that the previous transmission was not received.

- Normal operation: Client Text is changed by the user. A Diff is computed between Client Text and Client Shadow to obtain a set of edits which were made by the user. These edits are tagged with a client version number ('n') relating to the version of Client Shadow they were created from. Client Shadow is updated to reflect the current value of Client Text, and the client version number is incremented. The edits are sent to the server along with the client's acknowledgment of the current server version number ('m') from the previous connection. The server's Server Shadow should match both the provided client version number and the provided server version number. The server patches the edits onto Server Shadow, increments the client version number of Server Shadow and takes a backup of Server Shadow into Backup Shadow. Finally the server then patches the edits onto Server Text. The process then repeats symmetrically from the server to the client, with the exception that the client doesn't take a backup shadow. During the return communication the server will inform the client that it received the edits for version 'n', whereupon the client will delete edits 'n' from the stack of edits to send.

- Duplicate packet: The client appears to send Edits 'n' to the server twice. The first communication is processed normally and the response sent. Server Shadow's 'n' is incremented. The second communication contains an 'n' smaller than the 'n' recorded on Server Shadow. The server has no interest in edits it has already processed, so does nothing and sends back a normal response.

- Lost outbound packet: The client sends Edits 'n' to the server. The server never receives it. The server never acknowledges receipt of the edit. The client leaves the edits in the outbound stack. After the connection times out, the client takes another diff, updates the 'n' again, and sends both sets of edits to the server. The stack of edits transmitted keeps increasing until the server eventually responds with acknowledgment that it got a certain version.

- Lost return packet: The client sends Edits 'n' to the server. The server receives it, but the response is lost. The client leaves the edits in the outbound stack. After the connection times out, the client takes another diff, updates the 'n' again, and sends both sets of edits to the server. The server observes that the server version number 'm' which the client is sending does not match the server version number on Server Shadow. But both server and client version numbers do match the Backup Shadow. This indicates that the previous response must have been lost. Therefore the server deletes its edit stack and copies the Backup Shadow into Shadow Text (step 4). The server throws away the first edit because it already processed (same as a duplicate packet). The normal workflow is restored: the server applies the second edit, then computes and transmits a fresh diff to the client.

- Out of order packet: The server appears to lose a packet, and one (or both) of the lost packet scenarios is played out. Then the lost packet arrives, and the duplicate packet scenario is played out.

- Data corruption in memory or network: There are too many potential failure points to list, however if the shadow checksums become out of sync, or one side's version number skips into the future, the system will reinitialize itself. This will result in data loss for one side, but it will never result in an infinite loop of polling.

Now that we have understood the both the approaches. Let's go for the system design based on OT (Google docs uses OT) now:

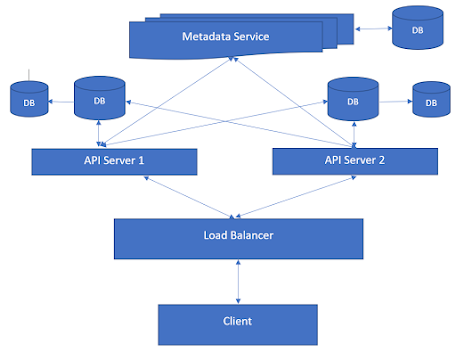

One thing to notice here is this is a real time operations where clients need to send the data to server and server also needs to send the data to clients so we are going to use web sockets here like we used in our What'sApp design. Obviously giving the load we would be needing a number of servers. Here we are using API gateway instead of load balancers as one request may result into multiple API calls which we don't want client to call instead client will just hit the API Gateway and in one call get all the results needed. For now our system looks like below:

We can safely assume that whenever a client loads a document, a web socket connection has been established. You can assume that server stores [user id] and [Server id, connection-id] mapping into cache and also it stores doc-id to List of user-ids into the cache. Here is our Server or Session Server looks like:

Our Session server mainly have three services:

- Document Update Service: This will apply the OT and save the document. It will also store the version history to DB. It will then call the below Group Operation Service with the operation.

- Group Operation Service: This service first get list of active user-ids given the document id and then it either queue the Operation and user id or call the below Send Operation service asynchronously for all the user ids and pass the operation.

- Send Operation Service: This will get the Server id and connection id using the user id and then just pass the operation to web socket. Web Socket pass the operation to client.

Other than main service Session Service. there could be more services like:

- Import / Export

- Comments (We have to use NoSQL DB here as comments data could be large)

- Permissions

That's all about the system design of Google docs or any collaborative editor. Please put your thoughts in the comments section if you see improvements here or have any query.