Problem: Design a distributed cloud storage system like Amazon S3 or Microsoft Azure Blob Storage. The design mainly needs to address the following requirements:

- Durability

- Availability

- Multitenancy

- Scalability

Design: Instead of jumping directly to the final design, let's start with a very basic one: there are clients and just one server, which exposes the APIs and also writes the files/data to its hard disk. This initial design has several limitations:

- A single server can serve only a certain number of requests.

- The number of I/O operations is limited.

- Parallelization is limited; otherwise the HDD will choke.

To solve this problem, we can scale the servers horizontally, that is, run multiple replicas of the same server. With this, our design looks like:

This solves some scalability problems, since the system can now handle more requests and store more data. The problem is that each user has to interact with one specific server, while every client request goes to the load balancer, which then has to route it to the correct server.

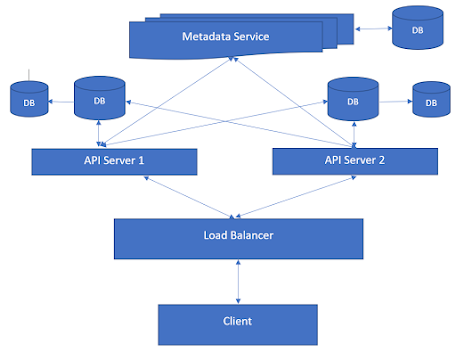

How will the load balancer know which server to route to? We could use sticky sessions or hash-based load balancing, but sticky sessions have their own problems. To resolve this, we can separate the API servers from the DB/HDD servers and add a metadata service that records which file was uploaded to which server. With this, here is how our design looks:

This means we also need to horizontally scale our Metadata Servers, and we need replicas of the DBs. An interesting point here is that we want to back up the DBs to different regions, so that if one region is down, client requests can still be served and we still meet our availability target. While replicating files/data to other regions we need strong consistency; we can't depend on an asynchronous process. What if the data is written to the primary server, but the primary crashes before the data reaches the replica servers? The whole file is lost, and our durability target can't be achieved. Here is how the design looks with these modifications:

Now we need to see how DB replication will happen. A DB server can itself initiate replication to other DBs, but how will it know where to replicate the data? And how will an API server know which DB server to hit when the primary DB is down? We can give all of these responsibilities to the Metadata Service/Server. To scale, we just need to add more API servers and DBs.
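To make these responsibilities concrete, here is a minimal in-memory sketch. Every name in it (`StorageNode`, `MetadataService`, `place`, `node_for_read`, `write_file`, the region labels) is an assumption made for illustration, and the placement rule (one healthy node per region) is just one possible policy. The write returns only after every chosen copy has been stored, which mirrors the synchronous replication argued for above.

```python
class StorageNode:
    """Stands in for one DB/HDD server (hypothetical)."""

    def __init__(self, name, region):
        self.name = name
        self.region = region
        self.healthy = True
        self.files = {}

    def store(self, file_id, data):
        self.files[file_id] = data


class MetadataService:
    """Tracks which nodes hold each file and which node to read from."""

    def __init__(self, nodes):
        self.nodes = nodes
        self.placement = {}  # file_id -> [primary, replica, ...]

    def place(self, file_id, copies=2):
        # Assumed policy: pick one healthy node per region until we have enough copies.
        chosen, seen_regions = [], set()
        for node in self.nodes:
            if node.healthy and node.region not in seen_regions:
                chosen.append(node)
                seen_regions.add(node.region)
            if len(chosen) == copies:
                break
        if len(chosen) < copies:
            raise RuntimeError("not enough healthy regions for the requested copies")
        self.placement[file_id] = chosen
        return chosen

    def node_for_read(self, file_id):
        # Fail over to the first healthy copy; None if the file is unknown or all copies are down.
        for node in self.placement.get(file_id, []):
            if node.healthy:
                return node
        return None


def write_file(metadata, file_id, data):
    """Synchronous write: acknowledge only after every copy is stored."""
    targets = metadata.place(file_id)
    for node in targets:
        node.store(file_id, data)
    return [node.name for node in targets]
```

With nodes `db-1` and `db-2` in one region and `db-3` in another, a write lands on `db-1` and `db-3`; if `db-1` is later marked unhealthy, `node_for_read` returns `db-3`.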

Now, with this understanding, let's jump to the actual design. Let's first understand an important component of this design: the Cluster Manager. This component manages the whole cluster / data center. Here are its responsibilities:

- Account Management

- Disaster Recovery

- Resource tracking

- Policies, Authentication and Authorization

Let's look at what happens when a new account is created:

- User sends a create account request to Cluster Manager.

- Cluster Manager collects the necessary info and makes the DB entries.

- Cluster Manager creates a URL for the root folder and adds a DNS entry that maps this URL to the IP address of the Load Balancer for further requests.

- Cluster Manager returns the root folder URL to the User / Client.
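The account-creation steps above can be sketched roughly as follows. The class and method names, the `storage.example.com` domain, and the use of plain dictionaries in place of the Cluster Manager DB and DNS are all assumptions for illustration only.

```python
class ClusterManager:
    """Hypothetical sketch of the account-creation path only."""

    def __init__(self, load_balancer_ip, domain="storage.example.com"):
        self.load_balancer_ip = load_balancer_ip
        self.domain = domain
        self.accounts = {}  # stands in for the Cluster Manager DB
        self.dns = {}       # stands in for DNS records: host -> IP

    def create_account(self, account_name, owner):
        if account_name in self.accounts:
            raise ValueError("account already exists")
        host = f"{account_name}.{self.domain}"
        # Step 2: make the DB entries.
        self.accounts[account_name] = {"owner": owner, "host": host}
        # Step 3: point the root folder host at the load balancer.
        self.dns[host] = self.load_balancer_ip
        # Step 4: return the root folder URL to the client.
        return f"https://{host}/"
```

For example, `ClusterManager("203.0.113.10").create_account("alice", "alice@example.com")` returns `https://alice.storage.example.com/` and leaves a DNS record for that host pointing at the load balancer.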

Here is what the flow looks like:

Now let's go to the next step: reading and writing files. Here is the complete design:

Before double-clicking into every component, let's understand the flow of, say, writing a file:

- Client gets the IP of the load balancer from DNS using the root folder URL.

- Client sends the write-file request to the load balancer.

- Load balancer redirects the request to one of the API servers.

- API Server first checks the auth-related info using the Cluster Manager DB.

- API Server computes the hash number from the file ID.

- API Server queries the cache, using the hash number, to find out which partition server the file should be written to.

- Basically, we can use range-based distribution: say, hash numbers 0-49 go to the 1st partition server, 50-99 go to the 2nd partition server, and so on.

- API Server redirects the request to the Partition Server.

- A stream server is attached to every partition server. The Partition Server redirects the request to its stream server to actually write the content.
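The hashing and range lookup steps of this flow can be sketched as below. The choice of SHA-256, the 100 buckets, and the server names are assumptions; the ranges are read as half-open, so a hash number of exactly 50 belongs to the second partition server.

```python
import bisect
import hashlib

# Exclusive upper bound of each hash range, and the server that owns that range.
RANGE_BOUNDS = [50, 100]
PARTITION_SERVERS = ["partition-1", "partition-2"]


def hash_number(file_id, buckets=100):
    """Map a file ID to a stable number in [0, buckets)."""
    digest = hashlib.sha256(file_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % buckets


def partition_for(hash_value):
    """Range-based lookup: find the first range whose upper bound exceeds the hash."""
    index = bisect.bisect_right(RANGE_BOUNDS, hash_value)
    return PARTITION_SERVERS[index]
```

An API server would call `partition_for(hash_number(file_id))` and forward the write to the returned partition server. Adding a partition server then means splitting a range, which changes only the two lists.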

That's all for the write flow; the read flow is similar. Let's double-click into what a stream server is. A stream server is a layer over a linked list of file servers in which only the head is empty. The file servers are, say, a layer over hard disks. This list is maintained by the Stream Manager. The Stream Manager can also add more stream servers and notify the Partition Manager to add more partition servers to attach to these stream servers.

The Stream Manager also holds the information about which file server within a stream server a particular file lives on. It can also tell the stream server where to replicate the files.
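Here is one possible reading of that structure as code. It assumes that "only the head is empty" means new writes always go to the head file server, and that a fresh file server is linked in as the new head once the current one fills up. The capacity limit, the `fs-N` names, and the `index` dictionary (standing in for the lookup information the Stream Manager holds) are hypothetical.

```python
class FileServer:
    """One node in the list; stands in for a layer over a hard disk."""

    def __init__(self, name, capacity, next_server=None):
        self.name = name
        self.capacity = capacity
        self.files = {}
        self.next = next_server  # older, already-filled file server

    def is_full(self):
        return len(self.files) >= self.capacity


class StreamServer:
    def __init__(self, capacity_per_server=2):
        self.capacity = capacity_per_server
        self.count = 1
        self.head = FileServer("fs-1", self.capacity)
        self.index = {}  # file_id -> file server name

    def write(self, file_id, data):
        # Only the head accepts writes; when it is full, link in a new head.
        if self.head.is_full():
            self.count += 1
            self.head = FileServer(f"fs-{self.count}", self.capacity, self.head)
        self.head.files[file_id] = data
        self.index[file_id] = self.head.name
        return self.head.name

    def read(self, file_id):
        # Walk the list from the head toward older file servers.
        node = self.head
        while node is not None:
            if file_id in node.files:
                return node.files[file_id]
            node = node.next
        return None
```

With a capacity of two files per file server, the third write creates `fs-2` as the new head while earlier files stay readable from `fs-1`.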

Having a Partition Manager brings several advantages:

- Parallelization

- Merging multiple identical read requests into one request

- Caching, etc.
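As an illustration of merging identical reads, the sketch below groups a batch of pending read requests by file ID and fetches each distinct file only once. The function name, the `(client, file_id)` request shape, and the `fetch` callback are assumptions; a real partition layer would do this concurrently rather than over a fixed batch.

```python
from collections import defaultdict


def coalesce_reads(requests, fetch):
    """requests: list of (client, file_id); fetch is called once per distinct file_id."""
    waiting = defaultdict(list)
    for client, file_id in requests:
        waiting[file_id].append(client)

    responses = {}
    for file_id, clients in waiting.items():
        data = fetch(file_id)  # single read from the storage layer
        for client in clients:
            responses[(client, file_id)] = data
    return responses
```

Three requests for two distinct files therefore cause only two reads against the stream server, while every client still gets its own response.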

The API Server can also maintain a cache, so that if the same read request arrives multiple times, it can be served directly from the cache.
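A minimal sketch of such a read cache follows. The least-recently-used eviction policy, the `max_entries` limit, and the `load` callback are assumptions, since the design does not say how the cache is sized or invalidated; a real cache would also need to drop entries when a file is overwritten or deleted.

```python
from collections import OrderedDict


class ReadCache:
    """Small LRU cache in front of the partition layer (hypothetical)."""

    def __init__(self, max_entries=2):
        self.max_entries = max_entries
        self.entries = OrderedDict()  # file_id -> data, oldest first

    def get(self, file_id, load):
        if file_id in self.entries:
            # Cache hit: mark as most recently used and skip the backend.
            self.entries.move_to_end(file_id)
            return self.entries[file_id]
        data = load(file_id)  # cache miss: read through to the backend
        self.entries[file_id] = data
        if len(self.entries) > self.max_entries:
            self.entries.popitem(last=False)  # evict the least recently used entry
        return data
```

Repeated reads of the same file call `load` only once, until that entry is evicted.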
